Operating System Objectives regarding Memory Management

- To keep track to how much memory is in use and what parts of it is

- To allocate and de-allocate memory between processes

- To move data to storage when memory is at capacity

- To communicate to the application that there is enough memory available even when there isn't. The reason this is done is so that processes can be executed even when the physical memory available is low.

- The way it's done is, operating system creates a virtual memory (say 4 GB) and communicates that to the process.

- Each process will have it's own virtual memory address but this address could be the same for another process as well. The location on the physical memory however will be different.

- These virtual addresses are translated to physical address in hardware using MMU and TLB.

5 requirements for memory management

- Protection

- Particularly if one process can access the memory of another process, it could potentially corrupt the data or cause security issues.

- Memory protection is not possible at compilation time.

- Memory protection can be done using CPU support by breaking down memory units into smaller elements called pages (usually 4kb but can be as large as 1mb). These pages then have some properties like 'non-executable bits' and 'memory allocation status'.

- Sharing

- Sometimes memory sharing might be necessary like sharing libraries.

- It could be harder to do with memory protection.

- There are mechanisms available to support memory sharing through 'virtual memory' and 'pages'.

- Relocation

- As new processes are created and old processes are terminated, the physical location of the process on the memory can be changed anytime by the operating system.

- Logical Organization

- Physical Organization

Partitioning Techniques

Memory Partitioning: Memory can be partitioned in different ways depending on the use cases

- Fixed Partitioning : This divides memory into different partitions based on number of processes. One process per partition. It's done when the operating system starts. Some issues here would be when the process is larger than the partition, there might be a loss of memory space. It's typically used in Aircraft systems.

- Dynamic Partitioning : Partitions are created dynamically, it's efficient but could become complex as extra operations are required to reduce external fragmentation. It also requires relocation of memory data.

- Paging : Mostly used for smartphones and laptops. Processes and Memory are partitioned into fixed size chunks. Pages for processes, Frames for memory.

- Segmentation : This is similar to paging. Memory is split into different chunks called segments. Segments can have different sizes. Multiple segments are created to separate programs, stack and data. Segmentation has hardware support on x86 architecture.

- Memory Allocation : Buddy Algorithm

- Virtual Memory : Useful for addressing more memory than is physically available. Meaning, it can be implied to the program or process that more memory is available than is physically available. Each process has its own virtual address space. This adds additional protection to the memory.

Page fault

Page fault occurs when page is stored on disk but not in RAM. The OS is usually called when this occurs. To solve this, the page needs to be fetched from the disk and ‘a page’ needs to be swapped out of the memory to replace it with the fetched page.

- There are 6 algorithms to do page replacement:

- First In, First Out : The page to be swapped out of the memory would be the oldest one. Easy to implement and quick to execute

- Optimal Algorithm : Whenever page fault occurs, the algorithm tries to find a page that will never be referenced (needed by a process) or that might be referenced farther away in the future and replaces that page with the missing page. Best algorithm for page fault reduction.

- Least Recently Used, Non Recently Used : Finds the least recently used page and replaces that with the missing one. Finding least recently used page is a complex operation.

- Clock Algorithms : Pages are kept in a circular format, with one hand of the clock pointing to the last examined page. When a page is replaced, the hand moves one position.

- Stack Algorithms

- Advantages

- Fast communication and synchronisation between processes

- If multiple processes require the same library, it can be loaded once and shared among the processes

- Easy MMU implementation

- Processes themselves can request/setup shared memory region. This can be achieved through paging, by inserting common pages.

Caching

Caching is a hardware mechanism used to speed-up the execution of instructions by the ALU by ensuring that enough instructions are carried out per clock cycle.

- Access to a variable when using virtual memory would be 20x to 100x times slower if not cached

- Various caches in memory

- IL1 - Instruction cache. Separated from data cache to avoid trashing

- L1 - Data cache

- L2, L3, L4 - Uniform data cache, often shared among processes

- TLB

- Micro-op decode cache - Stores micro operations that are decoded instructions

- Branch prediction caches

- Increasing cache associativity, increases cache hit/miss ratio

- Every CPU core has a small cache (L1 cache)

- Cache line : When data is transferred from memory into the cache, it is transferred in blocks of fixed size. These blocks are called cache lines or cache blocks

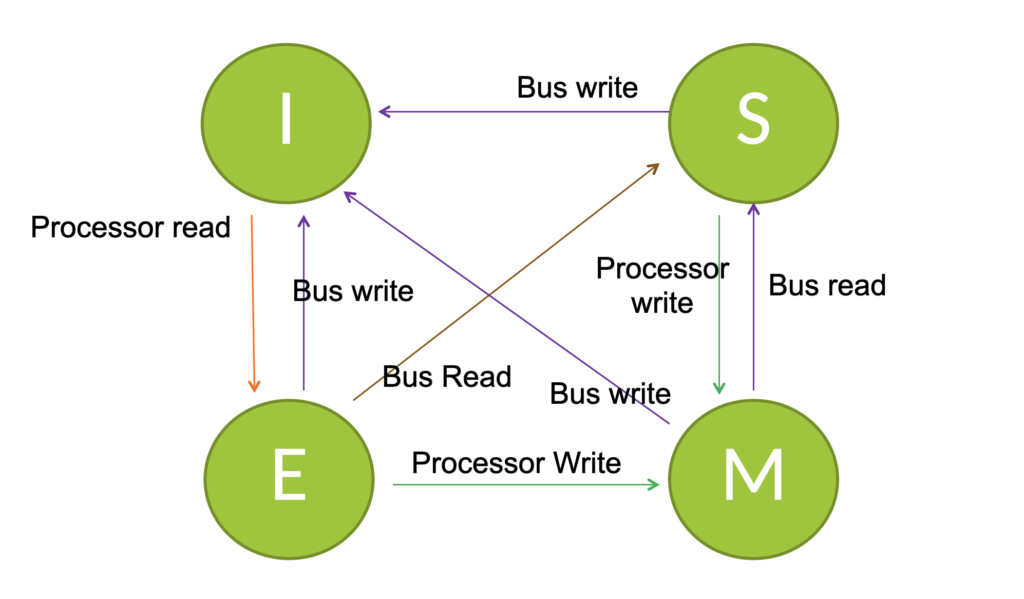

MESI Protocol

- Sometimes information need to be shared between the caches that are in the CPU cores. For example, if you are incrementing a variable using multiple threads, you won’t get an accurate result if the variable is not shared with the other caches after being processed in one CPU core.

- There is a protocol for this sharing mechanism, that’s the MESI protocol

- MESI - Modified. Exclusive. Shared. Invalid. These are the 4 states of a cache line (data block)

- Modified : The cache line (data block) inside the CPU core is present but has been modified and it differs from what is available in main memory and its content is local to the cache.

- Exclusive : Cache line (data block) is only within current cache but has not been modified

- Shared : Cache line (data block) is present but shared with other caches

- Invalid : Content is invalid

Trashing

When data is loaded on to the cache, some data is likely to be evicted (meaning replaced, cos of the small cache size). This usually happens when the memory stream is larger than the cache size. This can happen with data caches, pages, TLB caches, etc.

What is a Heap?

Heap stores dynamic variables that are allocated dynamically (as required) when the program runs. When the program is compiled, there might be some varibles which can be know explicitly to be required by the program such as global variables. Even these are stored in the heap.

What is a Stack?

Stack is a very small memory area, usually around 4 kilobytes.